This post is a quick reference on how to configure the OCI AI Vision and Language REST APIs in Oracle Digital Assistant (ODA) for use with the dialog flow REST component.

Before configuring anything in ODA, you must have the proper permissions in OCI to use the APIs.

Granting Access to OCI AI services

Open the OCI Console for the tenancy used to provision your ODA instance and navigate to the Identity & Security > Policies page.

Having selected the Root compartment, click Create Policy to open the policy editor. Fill in the form with the following details:

Name – AIServicesAccessPolicy

Description – Allow any users in the Tenancy to access the Language & Vision AI Services

Compartment – Confirm this is pointing to your root compartment. Tenancy Name -> Root

Set the Policy Builder’s Show Manual Editor toggle to ON

Paste the following policy statements into the Policy Builder:

allow any-user to use ai-service-language-family in tenancy

allow any-user to use ai-service-vision-analyze-image in tenancy

Click Create and confirm that both policy statements appear in the AIServicesAccessPolicy policy.

OCI AI Language

Click the ODA menu button at the top left and select Settings > API Services from the main menu.

Click the + Add REST Service button to bring up the Create REST Service dialog.

Create a REST Service Resource for the OCI AI Language Service: Detect Language Model.

Endpoint:

https://language.aiservice.<DC>.oci.oraclecloud.com/20210101/actions/detectDominantLanguage

Where <DC> is the identifier of the home region in which your tenancy and the ODA instance are provisioned.

For example: language.aiservice.ap-sydney-1.oci.oraclecloud.com
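The endpoint pattern can be sketched as a small helper that substitutes the region identifier into the host name. This is only an illustration and not part of ODA: the function name `language_endpoint` and its parameters are hypothetical, and the host pattern is taken from the ap-sydney-1 example above.

```python
def language_endpoint(region: str,
                      action: str = "detectDominantLanguage",
                      version: str = "20210101") -> str:
    """Build an OCI AI Language URL for a region identifier such as 'ap-sydney-1'.

    Hypothetical helper: it only formats the string and does not check
    that the region or action actually exists.
    """
    region = region.strip().lower()
    if not region:
        raise ValueError("region must not be empty")
    return (
        f"https://language.aiservice.{region}.oci.oraclecloud.com"
        f"/{version}/actions/{action}"
    )


print(language_endpoint("ap-sydney-1"))
```

Paste the printed URL into the Endpoint field of the Create REST Service dialog.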

Click Create to create the initial REST Resource definition.

Supply Security credentials for the API call.

ODA REST Connector resources support several different credential types to authenticate to secured REST services. One of them is the “OCI Resource Principal” credential type, which allows a service to securely call another service within the same tenancy without supplying individual user credentials.

Set the Authentication Type to “OCI Resource Principal”.

Within the Methods section, confirm that the POST Method is highlighted. If not already expanded, click on the Request Chevron (>) to expose the properties of the REST request.

Confirm that the Content-Type is defined as application/json

Copy the following JSON into the request’s “Body” definition as an example payload:

{"text": "Bom dia queria pedir uma pizza"}

Note: You can click [Use Edit Dialog] to load the JSON document into a larger editor window if there is insufficient space to edit the JSON directly in the field.

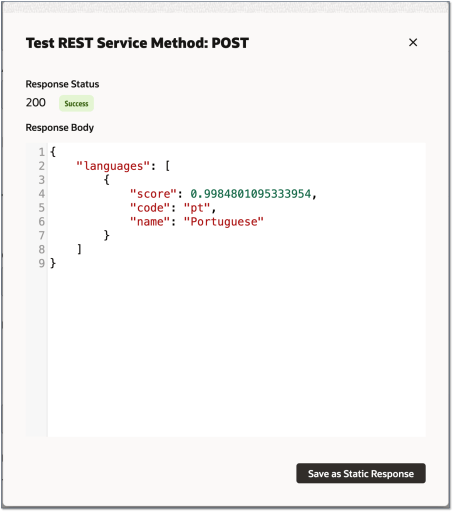

Test the validity of the REST Resource by clicking the Test Request button.

If you did not receive a 200 Status with the corresponding payload indicating that the supplied input was in Portuguese, close the dialog and:

- Confirm the validity of the properties for the REST service.

- Check if the OCI policies are configured correctly.

If the call succeeded (Status 200), click Save as Static Response to save the response as mock data, which you can use if the service is unavailable while you build your conversation flow.
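To show how the returned payload might be read, here is a minimal Python sketch that picks the highest-scoring language from a response body. The sample response is hypothetical: it assumes the body carries a `languages` list whose entries have `name`, `code`, and `score` fields, so check the static response you saved for the actual shape. The function name `dominant_language` is also illustrative.

```python
import json
from typing import Optional

# Hypothetical response body; the one returned by your service may differ.
SAMPLE_RESPONSE = '{"languages": [{"name": "Portuguese", "code": "pt", "score": 0.99}]}'


def dominant_language(body: str) -> Optional[dict]:
    """Return the highest-scoring language entry, or None if the list is empty."""
    languages = json.loads(body).get("languages") or []
    if not languages:
        return None
    return max(languages, key=lambda lang: lang.get("score", 0.0))


best = dominant_language(SAMPLE_RESPONSE)
print(best["code"] if best else "unknown")
```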

Note:

The configuration above is for the detectDominantLanguage endpoint. If you need other operations, such as sentiment detection, use the corresponding endpoint and update the request payload accordingly.

OCI AI Vision

For AI Vision, we will configure the analyzeImage endpoint.

All the steps are the same as for AI Language, except for the endpoint and the payload.

Endpoint:

https://vision.aiservice.<DC>.oci.oraclecloud.com/20220125/actions/analyzeImage

Where <DC> is the identifier of the home region in which your tenancy and the ODA instance are provisioned.

Set the Authentication Type to “OCI Resource Principal”.

Within the Methods section, confirm that the POST Method is highlighted. If not already expanded, click on the Request Chevron (>) to expose the properties of the REST request.

Confirm that the Content-Type is defined as application/json

Copy the following JSON into the request’s “Body” definition as an example payload:

{
  "features": [
    {
      "featureType": "TEXT_DETECTION"
    }
  ],
  "image": {
    "source": "INLINE",
    "data": "iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR42mP8z/C/HgAGgwJ/lK3Q6wAAAABJRU5ErkJggg=="
  },
  "compartmentId": "ocid1.compartment.oc1..aaaaaaaxxxxx"
}

NOTE: The “data” value is a base64-encoded sample image. The request requires the image you want to analyze as a base64-encoded payload. The “compartmentId” is the OCID of the compartment where ODA is provisioned.
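The inline payload can be assembled programmatically. The sketch below base64-encodes raw image bytes and builds a body with the same fields as the example above. The function name `build_analyze_image_body` is hypothetical, and the placeholder bytes stand in for a real image file that you would read with `open(path, "rb")`.

```python
import base64
import json


def build_analyze_image_body(image_bytes: bytes, compartment_id: str,
                             feature_type: str = "TEXT_DETECTION") -> dict:
    """Build an analyzeImage request body with the image embedded inline."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "features": [{"featureType": feature_type}],
        "image": {"source": "INLINE", "data": encoded},
        "compartmentId": compartment_id,
    }


# Placeholder bytes, not a valid image; replace with the content of a real file.
body = build_analyze_image_body(b"\x89PNG placeholder",
                                "ocid1.compartment.oc1..aaaaaaaxxxxx")
print(json.dumps(body, indent=2))
```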

NOTE 2: Instead of an inline payload, this service can also read the image from Object Storage.
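A request body for the Object Storage variant might look like the sketch below. The field names `namespaceName`, `bucketName`, and `objectName` and the `OBJECT_STORAGE` source value are written from memory of the Vision API reference and should be treated as assumptions to verify against it. The function name and the namespace, bucket, and object values are purely illustrative.

```python
import json


def build_object_storage_body(namespace: str, bucket: str, object_name: str,
                              compartment_id: str,
                              feature_type: str = "TEXT_DETECTION") -> dict:
    """Build an analyzeImage body that points at an object instead of inline data.

    Assumption: the image section uses source OBJECT_STORAGE with
    namespaceName, bucketName, and objectName fields.
    """
    return {
        "features": [{"featureType": feature_type}],
        "image": {
            "source": "OBJECT_STORAGE",
            "namespaceName": namespace,
            "bucketName": bucket,
            "objectName": object_name,
        },
        "compartmentId": compartment_id,
    }


# Illustrative values only.
body = build_object_storage_body("my-namespace", "my-bucket", "receipt.png",
                                 "ocid1.compartment.oc1..aaaaaaaxxxxx")
print(json.dumps(body, indent=2))
```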

NOTE 3: If you need to configure the DocumentAI endpoint, the same principles apply.

These instructions are part of a Live Labs Hands-On Lab available here.